More surfers, more data and more services: the web is growing by leaps and bounds, made possible by new technology and improved coding. We look beyond the horizon to see what lies ahead for the Internet.

The generation that learnt to surf the net before it could even walk is already among us. Accessing the Internet is as natural to them as breathing: an integral part of everyday life. Ideally, the future of the Internet would be shaped accordingly: accurate, fast and secure. Only technologies that can keep up with the growth of the Internet can guarantee this. Some of them are already being implemented, but they will need time. Expanding the pool of IP addresses by introducing the IPv6 protocol has been under way for many years, and rolling out the Domain Name System Security Extensions (DNSSEC), which ensure the authenticity of web addresses, will also take a while. In the meantime, new undersea cables through the Arctic's North-West Passage are being planned; they will reduce the response time of a link to Japan by 60 milliseconds. Yet the Internet of the future will not arrive in one massive explosion, and laying miles of cable is not the only way to deliver the continuously increasing data flow to the surfer efficiently. Instead, a mosaic of new software technologies at every level, from the data centre to the browser, will bring the biggest changes. The new horizon for the Internet is approaching, and we look beyond it to see what may well be the Internet you will experience next year.

The Internet of the Future

Better control of data flow

To process and send petabytes faster, software must be decoupled from hardware. Google shows how it can be done.

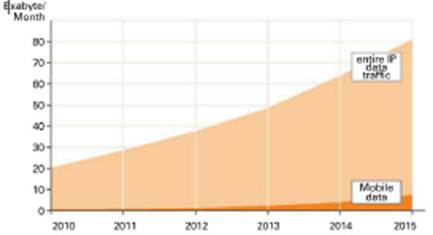

According to the UN's International Telecommunication Union, one third of the world's population was online in 2011, and average web traffic amounted to 90,000 gigabits per second. That is roughly 30 exabytes a month, and the figure keeps rising (see graph). The open question is how such an enormous data flow can be managed efficiently. Google has now shown large network suppliers such as Cisco and Juniper how it can be done. This is hardly surprising: according to a report by Arbor Networks, communication between Google's data centres and web surfers accounts for 6 to 10 percent of all global traffic. Aiming to be the number one provider, Google has also spoken up about the traffic between its own data centres: at the Open Network Summit in April, the company offered a small glimpse into how it regulates that traffic. It came as a big surprise, because Google had evidently abandoned conventional network architecture.
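The monthly figure can be checked with a quick unit conversion. A minimal sketch, assuming the 90,000 figure is in gigabits per second, decimal units and a 30-day month (read as 90,000 gigabytes per second, the result would be about eight times larger, around 233 exabytes):

```python
# Convert an average bandwidth into a monthly traffic volume.
# Assumptions: the ITU figure is in gigabits (not gigabytes) per second,
# decimal units (1 Gbit = 10**9 bits, 1 EB = 10**18 bytes), 30-day month.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds


def monthly_exabytes(gigabits_per_second: float) -> float:
    """Return the traffic volume in exabytes for a 30-day month."""
    bytes_per_second = gigabits_per_second * 1e9 / 8  # 8 bits per byte
    return bytes_per_second * SECONDS_PER_MONTH / 1e18


print(round(monthly_exabytes(90_000), 2))  # 29.16
```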

Providers normally expand their network capacity by buying hardware, together with the corresponding software, from large suppliers. Google, however, negotiates directly with Chinese manufacturers for its hardware and links the routers and switches via a Software Defined Network (SDN) controlled by the OpenFlow protocol. This allows the administrator to control the path of data packets through the network centrally and to avoid bottlenecks; if necessary, backups, email traffic or video streams can be given higher priority. Google needs this flexible control over the data flow because its internal network has to move many petabytes of data at short notice. In the long term, all providers will switch to SDN.
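The principle of separating central control from simple forwarding can be illustrated with a toy model. The sketch below is a hypothetical, heavily simplified flow table loosely modelled on OpenFlow's idea of rules made of match fields, a priority and an action; the class names, the `tcp_dst` field and the port numbers are assumptions for illustration, not the OpenFlow wire protocol or Google's implementation:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FlowRule:
    """One flow-table entry: match fields, priority and action (toy model)."""
    priority: int
    match: dict   # e.g. {"tcp_dst": 25}; an empty dict matches every packet
    action: str   # e.g. "output:2" = send out of switch port 2


@dataclass
class Switch:
    """Data plane only: forwards packets according to installed rules."""
    table: List[FlowRule] = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        self.table.append(rule)
        # Keep the highest-priority rules at the front of the table.
        self.table.sort(key=lambda r: r.priority, reverse=True)

    def forward(self, packet: dict) -> Optional[str]:
        # The first (highest-priority) rule whose fields all match wins.
        for rule in self.table:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return None  # table miss: a real switch would typically consult the controller


class Controller:
    """Control plane: a single central point that programs every switch."""

    def __init__(self, switches: List[Switch]) -> None:
        self.switches = switches

    def set_policy(self, rule: FlowRule) -> None:
        for switch in self.switches:
            switch.install(rule)


# Usage: route all traffic via port 1, but send email (TCP port 25) via port 2.
s1, s2 = Switch(), Switch()
controller = Controller([s1, s2])
controller.set_policy(FlowRule(priority=0, match={}, action="output:1"))
controller.set_policy(FlowRule(priority=100, match={"tcp_dst": 25}, action="output:2"))
print(s1.forward({"tcp_dst": 25}))  # output:2
print(s2.forward({"tcp_dst": 80}))  # output:1
```

The design point is that the switches hold no routing logic of their own: changing one policy on the controller changes the packet paths across the whole network.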

Who has the fastest file system in the world?

Besides transferring data, the web is taking on an increasing number of other tasks, and the same applies to cloud services for computing and data storage. Amazon's EC2 cloud, for example, accounts for one percent of all Internet traffic. Last year, Amazon's cloud stored 762 billion data sets (objects) and handled up to 500,000 requests per second. Only distributed file systems can ensure data integrity under such heavy load; they manage the metadata (name, size, date) separately from the actual file content. The Hadoop Distributed File System (HDFS), used by Facebook, Yahoo! and in the EC2 cloud, automatically creates several copies of each file and relies on a dedicated master server that manages only the metadata (see box). This allows it to handle many parallel accesses to petabytes of data, which makes the open-source HDFS one of the fastest file systems in the world.

Forecast: steadily rising web traffic

Cisco's Visual Networking Index provides the most precise data on web traffic. It forecasts that traffic will double over the next three years, driven in part by mobile devices.
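A doubling over three years corresponds to compound growth of roughly 26 percent a year. A small sketch of that calculation, assuming the growth is steady from year to year:

```python
def annual_growth_rate(factor: float, years: float) -> float:
    """Compound annual growth rate that multiplies traffic by `factor` in `years`."""
    return factor ** (1 / years) - 1


rate = annual_growth_rate(2, 3)  # traffic doubles in three years
print(f"{rate:.1%}")  # 26.0%
```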


OpenFlow: Google's new network

OpenFlow organises the traffic between Google's data centres. The open-source technology can distribute huge quantities of data more efficiently than the usual software for routers and switches.


HDFS: A file system for petabytes

Only distributed file systems such as HDFS can manage thousands of simultaneous accesses to huge quantities of data; special servers are responsible for administering them.

When a file is stored, the master server records the file's metadata (name, size, etc.) (1) and the contents are written to a data server (2). The master server (3) then instructs that the data contents be mirrored to a server in another rack (4).
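The four steps in the box can be sketched as a toy model. The class names, the least-loaded placement rule and the single extra copy are assumptions for illustration; real HDFS splits files into blocks, keeps three replicas by default, and its master server (the NameNode) tells clients which data servers (DataNodes) to write to rather than copying the data itself:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataServer:
    """Stores file contents (toy stand-in for an HDFS data server)."""
    name: str
    rack: str
    files: Dict[str, bytes] = field(default_factory=dict)


class MasterServer:
    """Manages only metadata; never keeps file contents itself."""

    def __init__(self, servers: List[DataServer]) -> None:
        self.servers = servers  # assumes servers in at least two racks
        self.metadata: Dict[str, dict] = {}

    def store(self, name: str, contents: bytes) -> List[str]:
        # (1) Record the file's metadata.
        self.metadata[name] = {"size": len(contents), "locations": []}
        # (2) Write the contents to the least-loaded data server.
        primary = min(self.servers, key=lambda s: len(s.files))
        primary.files[name] = contents
        # (3) The master instructs (4) a server in another rack to mirror the contents.
        other_racks = [s for s in self.servers if s.rack != primary.rack]
        replica = min(other_racks, key=lambda s: len(s.files))
        replica.files[name] = primary.files[name]
        self.metadata[name]["locations"] = [primary.name, replica.name]
        return self.metadata[name]["locations"]


servers = [DataServer("dn1", "rack-a"), DataServer("dn2", "rack-a"), DataServer("dn3", "rack-b")]
master = MasterServer(servers)
locations = master.store("report.txt", b"hello")
print(locations)  # ['dn1', 'dn3']
```

Keeping the copy in a different rack means the file survives even if an entire rack (for example its power supply or switch) fails.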


Stable Protocol for connections

No website reaches the browser without HTTP. Yet the protocol is largely inefficient. Its successor is up to 50 percent faster.

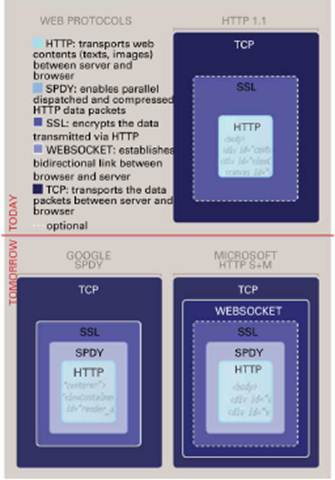

The Hypertext Transfer Protocol (HTTP), the cornerstone of Internet communication, is largely out of date: the current version (1.1) came out 13 years ago. While the underlying TCP transport protocol packs the data into individual packets, HTTP handles requesting the contents of a website from the server and prescribes how the elements of the website are delivered. HTTP 1.1 processes only one request at a time per TCP connection, so all elements of a website (text, images and scripts) are delivered one after the other. Current browsers work around this restriction by opening six parallel TCP connections. That is still not efficient enough: each additional connection can add a delay of up to 500 milliseconds, and every request carries its own redundant HTTP header, so more data is transferred than necessary. Moreover, the headers are sent uncompressed. The protocol also lets only the client ask for data: even if the server knows that the client will need more data, it must wait until the client requests it. Finally, HTTP does not provide encryption, which is why additional protocols such as SSL are used.
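The cost of handling one request at a time per connection can be made concrete with a rough model. This sketch counts sequential request rounds for a hypothetical page with 60 elements; it assumes no pipelining and ignores bandwidth, TCP slow start and connection setup, so it only shows the trend, not real load times:

```python
import math


def request_rounds(resources: int, connections: int, multiplexed: bool = False) -> int:
    """Sequential request/response rounds needed to fetch `resources` objects.

    Simplified model: without multiplexing, each TCP connection carries one
    outstanding request at a time; with multiplexing, all requests share one
    connection and are sent at once.
    """
    if multiplexed:
        return 1 if resources else 0
    return math.ceil(resources / connections)


# A page with 60 elements (text, images, scripts)
print(request_rounds(60, 6))                    # 10 rounds over six HTTP 1.1 connections
print(request_rounds(60, 1, multiplexed=True))  # 1 round over one multiplexed connection
```

Multiplying the number of rounds by the round-trip time to the server gives a rough lower bound on the waiting time, which is why parallel requests over a single connection are the main lever of the HTTP 2.0 proposals.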

Google and Microsoft Developing HTTP 2.0

The IETF (Internet Engineering Task Force) wants to address the many shortcomings of HTTP 1.1 and plans to introduce version 2.0 as a standard next year. The technology it will be based on is to be decided this year. Google and Microsoft have each submitted their own proposals, and both are considered hot candidates.

Google has already been using SPDY for two years; this protocol both modifies and complements HTTP 1.1. Firefox, Chrome and the Silk browser on the Kindle have already integrated SPDY; likewise, all Google services as well as Amazon, Twitter and the Apache web server support the technology. SPDY allows multiple HTTP requests to be sent in parallel over one connection, compresses the data and makes SSL encryption mandatory. According to reports, it speeds up data transfers by up to 50 percent.
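Why header compression pays off is easy to demonstrate with Python's `zlib` module. The sketch assumes, as SPDY does, one compression context shared by all requests on a connection; it uses plain HTTP 1.1 header text with made-up values for simplicity, and omits the preset dictionary of common header strings that SPDY also uses:

```python
import zlib

# Two requests from the same browser to the same server: the headers are
# almost identical (hypothetical example values).
headers_1 = (
    b"GET /index.html HTTP/1.1\r\n"
    b"Host: www.example.com\r\n"
    b"User-Agent: Mozilla/5.0 (Windows NT 6.1; rv:13.0) Gecko/20100101 Firefox/13.0\r\n"
    b"Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8\r\n"
    b"Accept-Language: en-gb,en;q=0.5\r\n"
    b"Cookie: session=8f2a1c9e7b; prefs=layout-wide\r\n\r\n"
)
headers_2 = headers_1.replace(b"/index.html", b"/style.css")

# One compression context for the whole connection: Z_SYNC_FLUSH emits each
# header block completely but keeps the history, so later blocks can refer
# back to text already sent in earlier ones.
compressor = zlib.compressobj()
block_1 = compressor.compress(headers_1) + compressor.flush(zlib.Z_SYNC_FLUSH)
block_2 = compressor.compress(headers_2) + compressor.flush(zlib.Z_SYNC_FLUSH)

print(len(headers_1), len(block_1))  # raw vs. compressed size, first request
print(len(headers_2), len(block_2))  # second request shrinks far more

# The receiving side keeps a matching decompression context.
decompressor = zlib.decompressobj()
assert decompressor.decompress(block_1) == headers_1
assert decompressor.decompress(block_2) == headers_2
```

Because the second header block differs from the first only in the path, the compressor can encode most of it as back-references to the first request.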

From Microsoft's perspective, however, Google's concept neglects the requirements of apps on mobile devices. Microsoft wants to address this with its HTTP Speed+Mobility protocol. It uses SPDY's technique for parallelisation but leaves encryption and compression optional, as both require computing power and drain the battery. In addition, Microsoft's proposal relies on WebSockets, which establish a persistent bi-directional connection between client and server: a concept best suited to apps that continuously send data to the web or receive data for further processing. Whichever proposal catches on, faster Internet surfing will most likely arrive next year.
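A WebSocket connection starts as an ordinary HTTP request that the server then upgrades to a persistent two-way channel. The sketch below computes the server's handshake reply as specified in RFC 6455, the WebSocket standard; it illustrates WebSockets in general rather than Microsoft's HTTP Speed+Mobility proposal, and the function names are hypothetical:

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the WebSocket opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value from the client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def handshake_response(client_key: str) -> str:
    """Server reply that switches the HTTP connection over to WebSocket."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {accept_key(client_key)}\r\n\r\n"
    )


# Sample key from RFC 6455, section 1.3
print(accept_key("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```

After this reply, both sides keep the TCP connection open and can send messages at any time, so the server no longer has to wait for the client to ask.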

HTTP 2.0: Two blueprints for the new protocol

The new version of the web protocol is due to be released next year. Two competing proposals from Google and Microsoft for optimising HTTP 1.1 are currently on the table.


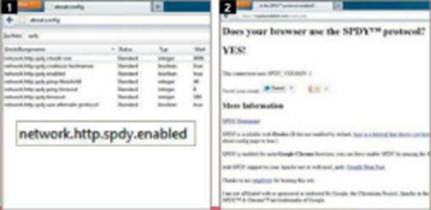

Firefox 13: Already supports HTTP 2.0 technology

With Google's SPDY protocol, you can now surf faster (1). It is activated by default in both Chrome and Firefox 13 (2). Users can test this at isspdyenabled.com.
