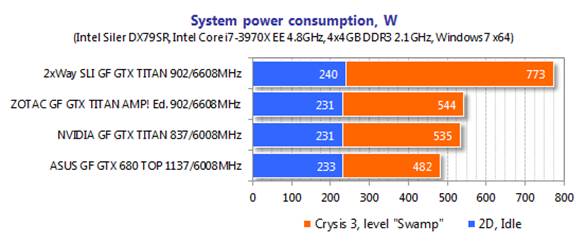

Power consumption

We measured the power consumption of our test system equipped with different graphics cards using the multi-function Zalman ZM-MFC3 panel, which reports how much power the computer (without the monitor) draws from the wall outlet. There were two test modes: 2D (editing text in Microsoft Word or surfing the web) and 3D (four runs of the intro scene of the "Swamp" level in Crysis 3 at 2,560x1,440 with maximum image quality settings, but without MSAA).

The power consumption of the Zotac GeForce GTX Titan AMP! Edition is compared with that of systems equipped with the ASUS GeForce GTX 680 DirectCU II TOP and the reference NVIDIA GeForce GTX Titan. We also checked the power requirements of two slightly overclocked Titans.

Power consumption

People who build an SLI setup with two GeForce GTX Titans will certainly own a 1,000-watt Platinum-class power supply, but it turned out that such a configuration can easily be powered by an 800 W PSU. And that is at peak load. In most other tests, the power consumption of this configuration is 710 to 730 watts. As for the single cards, the pre-overclocked Zotac needs just 11 watts more than the reference Nvidia card.
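To put those figures in perspective, here is a minimal sketch of the headroom arithmetic. It uses the 800 W rating and the 710 to 730 W readings quoted above; the function name `psu_headroom` and the 90% efficiency figure are illustrative assumptions, not measured values. Because the panel reads power at the wall outlet, the DC load on the power supply is somewhat lower than the reading, so the worst-case numbers are pessimistic.

```python
def psu_headroom(psu_rating_w, wall_draw_w, efficiency=1.0):
    """Return the spare PSU capacity in watts.

    wall_draw_w is AC power measured at the outlet. Multiplying it by the
    PSU efficiency estimates the DC load the PSU actually delivers.
    efficiency=1.0 is the pessimistic case (no conversion losses).
    """
    dc_load_w = wall_draw_w * efficiency
    return psu_rating_w - dc_load_w


# Wall readings taken from the text; 0.90 is an assumed efficiency.
for wall_draw in (710, 730):
    worst = psu_headroom(800, wall_draw)
    estimate = psu_headroom(800, wall_draw, efficiency=0.90)
    print(f"{wall_draw} W at the wall: {worst:.0f} W spare (worst case), "
          f"about {estimate:.0f} W spare at an assumed 90% efficiency")
```

Even in the pessimistic case there is a margin left on an 800 W unit, which is consistent with the observation above.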

Now let's look at the performance we can expect from a graphics card that costs as much as a high-quality, latest-generation 55-inch 3D TV.

Testing configuration and methodology

All participating graphics cards were tested in a system with the following configuration:

· Mainboard: Intel Siler DX79SI (Intel X79 Express, LGA 2011, BIOS 0559 from 03/05/2013)

· CPU: Intel Core i7-3970X Extreme Edition, 3.5/4.0 GHz (Sandy Bridge-E, C2, 1.1 V, 6 x 256 KB L2, 15 MB L3)

· CPU cooler: Phanteks PH-TC14PE (2 x Corsair AF140 fans at 900 RPM)

· Thermal interface: ARCTIC MX-4

· Graphics cards: ZOTAC GeForce GTX TITAN AMP! Edition 6GB, NVIDIA GeForce GTX TITAN 6GB, ASUS GeForce GTX 680 DirectCU II TOP 2GB (GTX680-DC2T-2GD5)

· System memory: DDR3 4 x 4GB Mushkin Redline (Spec: 2133 MHz / 9-11-10-28 / 1.65 V)

· System drive: Crucial m4 256 GB SSD (SATA-III, CT256M4SSD2, firmware v0009)

· Drive for programs and games: Western Digital VelociRaptor (300GB, SATA-II, 10,000 RPM, 16MB cache, NCQ) inside a Scythe Quiet Drive 3.5” HDD silencer and cooler

· Backup drive: Samsung Ecogreen F4 HD204UI (SATA-II, 2 TB, 5400 RPM, 32 MB, NCQ)

· System case: Antec Twelve Hundred (front panel: three Noiseblocker NB-Multiframe S-Series MF12-S2 fans at 1020 RPM; back panel: two Noiseblocker NB-BlackSilentPRO PL-1 fans at 1020 RPM; top panel: standard 200mm fan at 400 RPM)

· Control and monitoring panel: Zalman ZM-MFC3

· Power supply: Corsair AX1200i 1,200W (with a default 120mm fan)

· Monitor: 27” Samsung S27A850D (DVI-I, 2,560x1,440, 60Hz)

Let me remind you what the cards look like:

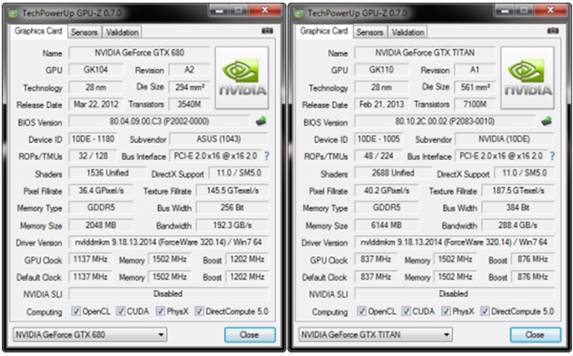

ASUS DirectCU II and Nvidia GeForce GTX Titan

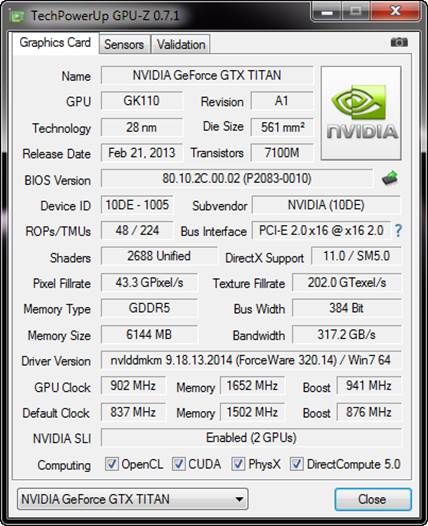

Two NVIDIA GeForce GTX versions

I also want to add that we did not have any issues (hardware or software) building a 2-way SLI configuration with two GeForce GTX TITAN cards.

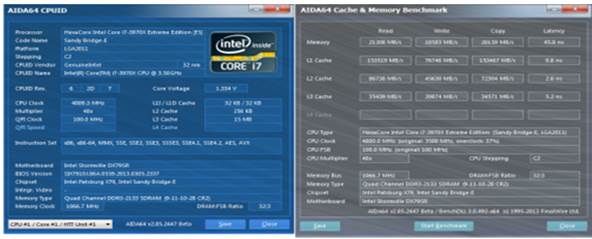

32nm six-core CPU

BCLK frequency set at 100 MHz

To reduce the dependence of graphics card performance on overall platform speed, I overclocked the 32nm six-core CPU to 4.8 GHz using a 48x multiplier, with the BCLK frequency set at 100 MHz and "Load-Line Calibration" enabled. The CPU Vcore was raised to 1.38 V in the motherboard BIOS.

CPU Vcore raised to 1.38 V in the motherboard BIOS

Hyper-Threading Technology was enabled. The 16 GB of DDR3 system memory operated at 2,133 MHz with 9-11-10-28 timings at 1.65 V.
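As a quick sanity check of the CPU overclock described above, the core clock is simply the BCLK frequency multiplied by the CPU multiplier. The sketch below uses the 100 MHz BCLK and 48x multiplier from the text and the 3.5 GHz base clock from the configuration list; the function name `core_clock_mhz` is hypothetical and only serves the illustration.

```python
def core_clock_mhz(bclk_mhz, multiplier):
    """Core clock in MHz: base clock (BCLK) times the CPU multiplier."""
    return bclk_mhz * multiplier


BASE_CLOCK_MHZ = 3500  # nominal 3.5 GHz base clock from the configuration list

overclocked = core_clock_mhz(bclk_mhz=100, multiplier=48)
gain = overclocked / BASE_CLOCK_MHZ - 1

print(f"Overclocked core clock: {overclocked / 1000:.1f} GHz")
print(f"Increase over the base clock: {gain:.1%}")
```

Raising only the multiplier and leaving BCLK at 100 MHz keeps the other clocks derived from BCLK at their nominal values, which is presumably why this route was taken here.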

Testing started on May 21, 2013. All tests were performed on Microsoft Windows 7 Ultimate x64 SP1 with all necessary updates as of that date and the following drivers:

· Intel Chipset Drivers 9.4.0.1017 WHQL from 27/03/2013 for the mainboard chipset

· DirectX End-User Runtimes libraries from 30/11/2010

· NVIDIA GeForce 320.14 Beta driver from 13/05/2013 for the NVIDIA-based graphics cards

We tested graphics card performance at two resolutions: 1,920x1,080 and 2,560x1,440. Testing was conducted in two image quality modes: "Quality + AF16x", which is the default texture quality in the drivers with 16x anisotropic filtering enabled, and "Quality + AF16x + MSAA 4x (8x)", which adds 4x or 8x full-screen antialiasing where the average frame rate was high enough for comfortable gaming. We enabled anisotropic filtering and full-screen antialiasing in the game settings. If the corresponding options were missing, we changed these settings in the control panels of the Catalyst and GeForce drivers. We also disabled Vsync there. No other changes were made to the driver settings.

Our benchmark suite includes two popular semi-synthetic benchmarks and ten resource-demanding games of various genres:

· 3DMark 2013 (DirectX 9/11) – version 1.0, benchmarks in “Cloud Gate”, “Fire Strike” and “Fire Strike Extreme”;

· Unigine Valley Bench (DirectX 11) – version 1.0, maximum image quality settings, AF16x and (or) MSAA 4x, 1920x1080 resolution;

· Metro 2033: The Last Refuge (DirectX 10/11) – version 1.2, maximum graphics quality settings, official benchmark, “High” image quality settings; tessellation, DOF and MSAA4x disabled; AAA antialiasing enabled, two consecutive runs of the “Frontline” scene;

· Total War: Shogun 2: Fall of the Samurai (DirectX 11) – version 1.1.0, built-in benchmark (Sekigahara battle) at maximum graphics quality settings, with MSAA 8x enabled in one of the test modes;

· Battlefield 3 (DirectX 11) – version 1.4, all image quality settings set to “Ultra”, two successive runs of a 110-second scripted scene from the beginning of the “Going Hunting” mission;

· Sniper Elite V2 Benchmark (DirectX 11) – version 1.05, we used Adrenaline Sniper Elite V2 Benchmark Tool v1.0.0.2 BETA with maximum graphics quality settings (“Ultra” profile), Advanced Shadows: HIGH, Ambient Occlusion: ON, Stereo 3D: OFF, two sequential test runs;

· Sleeping Dogs (DirectX 11) – version 1.5, we used Adrenaline Sleeping Dogs Benchmark Tool v1.0.0.3 BETA with maximum image quality settings, Hi-Res Textures pack installed, FPS Limiter and V-Sync disabled, two consecutive runs of the built-in benchmark with antialiasing quality at Normal and Extreme levels;

· Hitman: Absolution (DirectX 11) – version 1.0.446.0, built-in test with Ultra image quality settings, with tessellation, FXAA and global lighting enabled;

· Crysis 3 (DirectX 11) – version 1.0.1.3, all graphics quality settings at maximum, Motion Blur amount – Medium, lens flares – on, FXAA and MSAA4x modes enabled, two consecutive runs of a 110-second scripted scene from the beginning of the “Swamp” mission;

· Tomb Raider (2013) (DirectX 11) – version 1.1.732.1, all image quality settings set to “Ultra”, V-Sync disabled, FXAA and 2x SSAA antialiasing enabled, TressFX technology activated, two consecutive runs of the benchmark built into the game;

· BioShock Infinite (DirectX 11) – version 1.1.21.26939, we used Adrenaline Action Benchmark Tool v1.0.2.1, two consecutive runs of the built-in benchmark with “Ultra” and “Ultra + DOF” quality settings;

· Metro: Last Light (DirectX 11) – version 1.0.2, we used the built-in benchmark for two consecutive runs of the D6 scene. All image quality and tessellation settings were at “Very High”, “Advanced PhysX” technology was enabled, and we tested with and without SSAA antialiasing.

If a game allowed recording the minimum fps, those readings were also added to the charts. We ran each test and took the best result for the charts, provided the difference between runs did not exceed 1%. If it did exceed 1%, we ran the test again until the results matched.
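The repeatability rule above can be sketched roughly as follows. This is only an illustration under stated assumptions: the function name `repeatable_best`, the choice to start with two runs (suggested by the "two consecutive runs" in the list), the comparison of the two most recent runs, and the `max_runs` cap are all hypothetical details that the text does not specify.

```python
def repeatable_best(run_benchmark, tolerance=0.01, max_runs=5):
    """Return the better of two consecutive runs that agree within tolerance.

    run_benchmark: callable returning an average fps figure for one run.
    tolerance:     maximum allowed relative difference (0.01 means 1%).
    max_runs:      assumed safety cap so the loop cannot run forever.
    """
    results = [run_benchmark(), run_benchmark()]
    while True:
        prev, last = results[-2], results[-1]
        # Relative difference, measured against the larger of the two runs.
        if abs(prev - last) / max(prev, last) <= tolerance:
            return max(prev, last)
        if len(results) >= max_runs:
            raise RuntimeError("results did not stabilise within max_runs")
        results.append(run_benchmark())


# Usage with made-up fps values standing in for real benchmark runs.
canned_fps = iter([60.0, 62.0, 62.3])
best = repeatable_best(lambda: next(canned_fps))
print(f"Result for the chart: {best} fps")
```

In this made-up example the first two runs differ by more than 1%, so a third run is taken; the second and third runs agree, and the better of those two goes into the chart.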